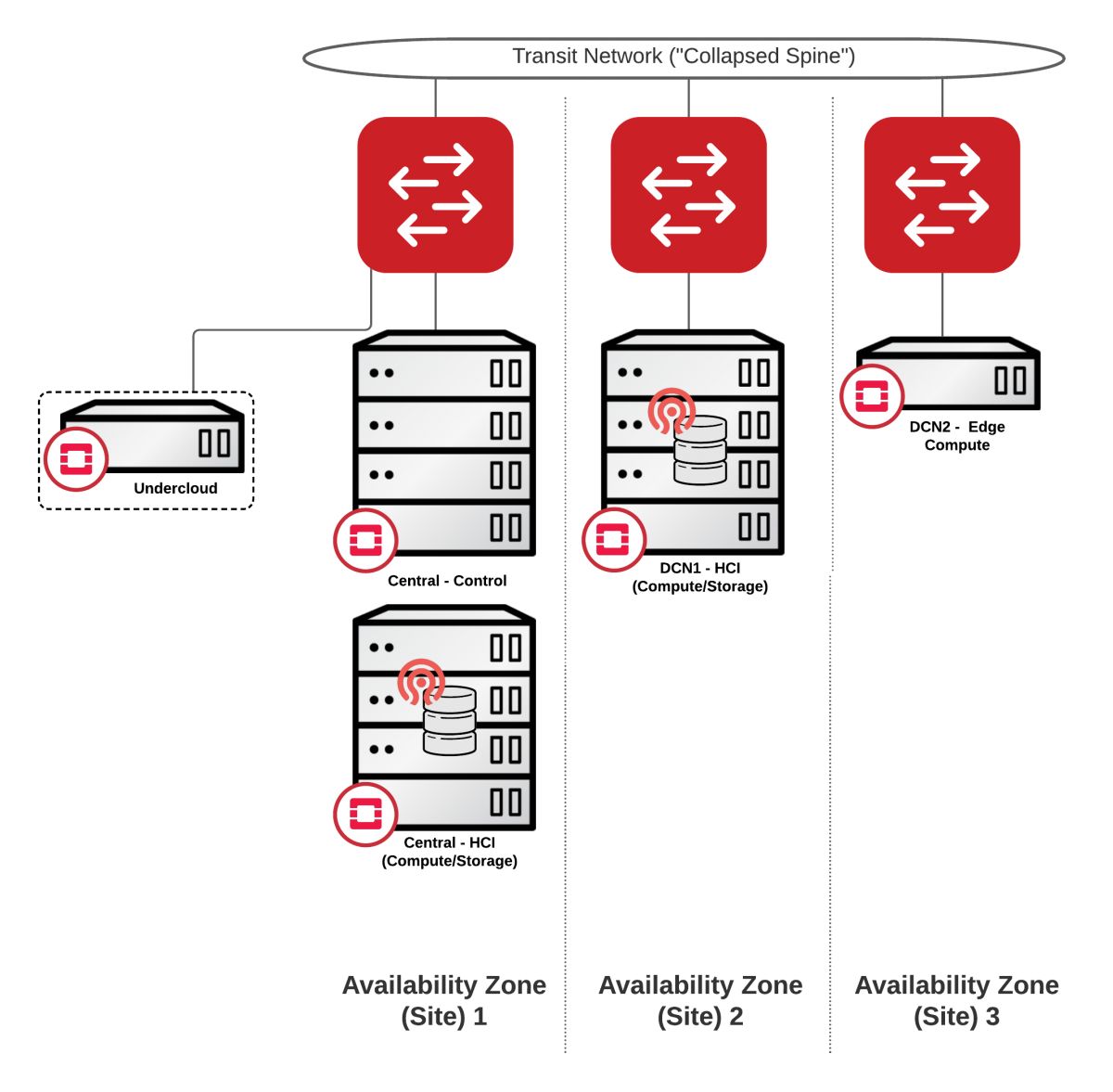

I have hesitated to post anything about Red Hat OpenStack Edge since it was introduced in OSP 13, simply because I found it quite difficult to consume. Also, the storage situation back then was... not complete. Things have improved over time, and now with OSP 16.1 we can deploy our private cloud with the following features (which, IMHO, finally make it production-ready):

- Ceph storage at the edge (DCN)
- One stack per site for better management and lifecycle
- Improved routed networking configuration
- Image caching at the edge

So why would anyone do this? I see three distinct benefits:

1. Simplified management and lifecycle of rather complex architectures
2. Efficient distributed compute/storage with no control-plane overhead at the edge sites
3. High availability and high performance at the edge

And for once, I don’t know of any other infrastructure software that can do this in a supportable way.

Demo:

So now we know why. Let’s focus on how.

I. Official Documents

I referenced three separate documents to come up with my architecture:

DCN Docs:

Leaf Spine Docs:

Upstream DCN Docs:

II. Relevant artifacts from my lab:

1. Deployment scripts:

(undercloud) [stack@chrisj-dcn2-undercloud ~]$ cat deploy-central.sh

```bash
#!/bin/bash
source ~/stackrc
cd ~/

# Central site stack. glance_update.yaml and dcn_ceph.yaml were added
# after the DCN sites were deployed (see section 5).
time openstack overcloud deploy --templates --stack chrisjdcn-central \
  -n templates/network_data_spine_leaf.yaml \
  -r templates/central_roles.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml \
  -e templates/node-info.yaml \
  -e templates/ceph-custom-config.yaml \
  -e templates/network-environment.yaml \
  -e templates/spine-leaf-ctlplane.yaml \
  -e templates/spine-leaf-vips.yaml \
  -e templates/host-memory.yaml \
  -e templates/site-name.yaml \
  -e templates/inject-trust-anchor-hiera.yaml \
  -e templates/containers-prepare-parameter.yaml \
  -e templates/glance_update.yaml \
  -e templates/dcn_ceph.yaml \
  --log-file chrisj-dcn_deployment.log \
  --ntp-server 10.10.0.10
```

(undercloud) [stack@chrisj-dcn2-undercloud ~]$ cat deploy-dcn1.sh

```bash
#!/bin/bash
source ~/stackrc
cd ~/

# DCN1: hyperconverged edge site (compute + local Ceph cluster "dcn1").
# The dcn-common/ files carry data from the central stack.
time openstack overcloud deploy --templates --stack chrisj-dcn1 \
  -n templates/network_data_spine_leaf.yaml \
  -r templates/dcn1/dcn1_roles.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/dcn-hci.yaml \
  -e templates/host-memory.yaml \
  -e templates/dcn1/site-name.yaml \
  -e dcn-common/central-export.yaml \
  -e dcn-common/central_ceph_external.yaml \
  -e templates/dcn1/tuning.yaml \
  -e templates/dcn1/glance.yaml \
  -e templates/inject-trust-anchor-hiera.yaml \
  -e templates/containers-prepare-parameter.yaml \
  -e templates/dcn1/dcn1-images-env.yaml \
  -e templates/dcn1/node-info.yaml \
  -e templates/dcn1/ceph.yaml \
  -e templates/network-environment.yaml \
  -e templates/spine-leaf-ctlplane.yaml \
  -e templates/spine-leaf-vips.yaml \
  --log-file chrisj-dcn_deployment.log \
  --ntp-server 10.10.0.10
```

(undercloud) [stack@chrisj-dcn2-undercloud ~]$ cat deploy-dcn2.sh

```bash
#!/bin/bash
#############################
# This is not fully dynamic file and it might have not been populated with all right information. This is a template. You might still want to verify this is what you want before executing it
##############################
source ~/stackrc
cd ~/

# DCN2: compute-only edge site; no Ceph environment files are included.
time openstack overcloud deploy --templates --stack chrisj-dcn2 \
  -n templates/network_data_spine_leaf.yaml \
  -r templates/dcn2/dcn2_roles.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml \
  -e templates/dcn2/site-name.yaml \
  -e dcn-common/central-export.yaml \
  -e templates/inject-trust-anchor-hiera.yaml \
  -e templates/containers-prepare-parameter.yaml \
  -e templates/dcn2/node-info.yaml \
  -e templates/network-environment.yaml \
  -e templates/spine-leaf-ctlplane.yaml \
  -e templates/spine-leaf-vips.yaml \
  --log-file chrisj-dcn2_deployment.log \
  --ntp-server 10.10.0.10
```
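Because every site is its own stack, the stacks have to go in a particular order: the DCN stacks consume data exported from the central stack, and the central stack only learns about the DCN1 Ceph cluster afterwards (section 5). Below is a minimal sketch of that order. It assumes a hypothetical `deploy-central-initial.sh` (i.e. `deploy-central.sh` without `glance_update.yaml` and `dcn_ceph.yaml`), and that `dcn-common/central_ceph_external.yaml` has been produced separately as described in the DCN docs. The `openstack overcloud export` options are my assumption for the 16.1 client, so check `openstack overcloud export --help` on your undercloud. By default the sketch only prints the commands.

```bash
#!/bin/bash
# Sketch of the stack deployment order for this lab (an assumption, not an
# official procedure). Assumes ~/stackrc is already sourced in this shell.
# DRY_RUN=1 (the default) only prints each command; DRY_RUN=0 executes it.

run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_all() {
  # 1. Central stack, first pass. deploy-central-initial.sh is hypothetical:
  #    deploy-central.sh without glance_update.yaml and dcn_ceph.yaml.
  run bash deploy-central-initial.sh || return 1

  # 2. Export the central stack data that the DCN stacks include.
  run openstack overcloud export --stack chrisjdcn-central \
    --output-file dcn-common/central-export.yaml || return 1

  # 3. Edge sites, each deployed as an independent stack.
  run bash deploy-dcn1.sh || return 1
  run bash deploy-dcn2.sh || return 1

  # 4. Central stack again, now with the DCN1 Glance store and Ceph cluster.
  run bash deploy-central.sh
}
```

Calling `deploy_all` shows the plan; `DRY_RUN=0 deploy_all` runs it from the stack user's home directory.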

2. Central location YAML files

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat node-info.yaml

```yaml
parameter_defaults:
  OvercloudControllerFlavor: control
  OvercloudComputeFlavor: compute
  OvercloudComputeLeaf1Flavor: compute-leaf1
  OvercloudComputeLeaf2Flavor: compute-leaf2
  ComputeLeaf1Count: 0
  ComputeLeaf2Count: 0
  ControllerCount: 3
  ComputeCount: 3
```
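The `compute-leaf1` and `compute-leaf2` flavors named in `node-info.yaml` have to exist on the undercloud, and each leaf's bare-metal nodes need a matching profile. Here is a minimal sketch of that step, following the usual TripleO profile-matching pattern. The RAM/disk/vCPU sizes and the node argument are placeholders, and the exact properties are my assumption, so check them against the spine-leaf docs for your release.

```bash
# Create a profile-matched flavor for one leaf (sizes are placeholders).
create_leaf_flavor() {
  local profile="$1"   # e.g. compute-leaf1
  openstack flavor create --id auto --ram 4096 --disk 40 --vcpus 1 "$profile" || return 1
  openstack flavor set \
    --property "capabilities:boot_option"="local" \
    --property "capabilities:profile"="$profile" \
    --property "resources:CUSTOM_BAREMETAL"="1" \
    --property "resources:DISK_GB"="0" \
    --property "resources:MEMORY_MB"="0" \
    --property "resources:VCPU"="0" \
    "$profile"
}

# Tag one bare-metal node so the scheduler matches it to that profile.
tag_node_for_profile() {
  local node="$1" profile="$2"
  openstack baremetal node set \
    --property capabilities="profile:${profile},boot_option:local" "$node"
}
```

For DCN1 that would be `create_leaf_flavor compute-leaf1`, then `tag_node_for_profile` once per node in that leaf.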

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat ceph-custom-config.yaml

```yaml
parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/vdb
    osd_scenario: lvm
    osd_objectstore: bluestore
  CephPoolDefaultPgNum: 16
  CephPoolDefaultSize: 1  # single replica: no data redundancy (lab setting)
  CephClusterName: central
  CephAnsibleExtraConfig:
    public_network: '10.40.0.0/24,10.40.1.0/24'
    cluster_network: '10.50.0.0/24,10.50.1.0/24'
  GlanceEnabledImportMethods: web-download,copy-image
  GlanceBackend: rbd
  GlanceStoreDescription: 'central rbd glance store'
  GlanceBackendID: central
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat network-environment.yaml

```yaml
resource_registry:
  OS::TripleO::Compute::Net::SoftwareConfig: ./nic-config/compute-hci.yaml
  OS::TripleO::ComputeLeaf1::Net::SoftwareConfig: ./nic-config/compute-hci-leaf1.yaml
  OS::TripleO::ComputeLeaf2::Net::SoftwareConfig: ./nic-config/compute-leaf2.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: ./nic-config/controller.yaml
  OS::TripleO::CephStorage::Net::SoftwareConfig: ./nic-config/ceph-storage.yaml

parameter_defaults:
  DnsServers: ["10.9.71.7", "8.8.8.8"]
  NeutronFlatNetworks: 'datacentre,provider0,provider1,provider2'
  ControllerParameters:
    NeutronBridgeMappings: "datacentre:br-ex,provider0:br-provider"
  ComputeParameters:
    NeutronBridgeMappings: "provider0:br-provider"
  ComputeLeaf1Parameters:
    NeutronBridgeMappings: "provider1:br-provider"
  ComputeLeaf2Parameters:
    NeutronBridgeMappings: "provider2:br-provider"
  BondInterfaceOvsOptions: "bond_mode=active-backup"
  TimeZone: 'US/Eastern'
  NtpServer: 10.10.0.10
  NeutronEnableIsolatedMetadata: true
```
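Each leaf gets its own flat physical network (`provider0`, `provider1`, `provider2` in the bridge mappings above), and the DCN site files below pin each site's DHCP agents to an availability zone via `neutron::agents::dhcp::availability_zone`. A provider network for one edge site could then be created roughly like this. The network and subnet names, the CIDR, and the use of `--availability-zone-hint` are illustrative assumptions, not something taken from my lab.

```bash
# Create a flat provider network scoped to one edge site (hypothetical names).
# physnet must match a NeutronBridgeMappings entry on that site's computes,
# e.g. provider1 for ComputeLeaf1 (dcn1) and provider2 for ComputeLeaf2 (dcn2).
create_site_network() {
  local site="$1" physnet="$2" cidr="$3"
  openstack network create \
    --provider-network-type flat \
    --provider-physical-network "$physnet" \
    --availability-zone-hint "$site" \
    "${site}-provider" || return 1
  openstack subnet create \
    --network "${site}-provider" \
    --subnet-range "$cidr" \
    "${site}-provider-subnet"
}
```

For example, `create_site_network dcn1 provider1 192.0.2.0/24`, with the CIDR replaced by whatever range is actually routed at that site.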

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat spine-leaf-ctlplane.yaml

```yaml
parameter_defaults:
  ControllerControlPlaneSubnet: leaf0
  ComputeControlPlaneSubnet: leaf0
  ComputeLeaf1ControlPlaneSubnet: leaf1
  ComputeLeaf2ControlPlaneSubnet: leaf2
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat spine-leaf-vips.yaml

```yaml
parameter_defaults:
  VipSubnetMap:
    ctlplane: leaf0
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat site-name.yaml

```yaml
parameter_defaults:
  NovaComputeAvailabilityZone: central
  ControllerExtraConfig:
    nova::availability_zone::default_schedule_zone: central
  NovaCrossAZAttach: false
  CinderStorageAvailabilityZone: central
  GlanceBackendID: central
```

3. DCN1 location YAML files

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn1/ceph.yaml

```yaml
parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/vdb
    osd_scenario: lvm
    osd_objectstore: bluestore
  CephPoolDefaultPgNum: 16
  CephPoolDefaultSize: 1
  CephClusterName: dcn1
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn1/glance.yaml

```yaml
parameter_defaults:
  GlanceEnabledImportMethods: web-download,copy-image
  GlanceBackend: rbd
  GlanceStoreDescription: 'dcn1 rbd glance store'
  GlanceBackendID: dcn1
  GlanceMultistoreConfig:
    central:
      GlanceBackend: rbd
      GlanceStoreDescription: 'central rbd glance store'
      CephClientUserName: 'openstack'
      CephClusterName: central
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn1/node-info.yaml

```yaml
parameter_defaults:
  ControllerCount: 0
  ComputeCount: 0
  OvercloudControllerFlavor: control
  OvercloudComputeFlavor: compute
  OvercloudComputeLeaf1Flavor: compute-leaf1
  OvercloudComputeLeaf2Flavor: compute-leaf2
  ComputeLeaf1Count: 3
  ComputeLeaf2Count: 0
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn1/site-name.yaml

```yaml
parameter_defaults:
  NovaComputeAvailabilityZone: dcn1
  NovaCrossAZAttach: false
  CinderStorageAvailabilityZone: dcn1
  CinderVolumeCluster: dcn1
  ComputeLeaf1ExtraConfig:
    neutron::agents::dhcp::availability_zone: 'dcn1'
```
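With `NovaCrossAZAttach: false`, Nova will not attach a Cinder volume from a different availability zone, so an instance and its volumes have to live in the same zone. Here is a minimal sketch of booting from a volume at `dcn1`. It assumes the image already exists in the `dcn1` Glance store, and every resource name and the 10 GB size are placeholders.

```bash
# Boot an instance from a volume at one site; all names are placeholders.
boot_at_site() {
  local az="$1" image="$2" flavor="$3" network="$4" name="$5"
  local vol="${name}-root" status="" i

  # Create the root volume in the same AZ the instance will use.
  openstack volume create --availability-zone "$az" --image "$image" \
    --size 10 "$vol" || return 1

  # Poll (up to 60 times) until the volume is available.
  for i in $(seq 1 60); do
    status="$(openstack volume show -f value -c status "$vol")"
    [[ "$status" == "available" ]] && break
    [[ "$status" == "error" ]] && return 1
    sleep "${POLL_SECONDS:-5}"
  done
  [[ "$status" == "available" ]] || return 1

  # Boot the instance in the same AZ from that volume.
  openstack server create --availability-zone "$az" --flavor "$flavor" \
    --volume "$vol" --network "$network" "$name"
}
```

For example, `boot_at_site dcn1 cirros m1.small dcn1-provider vm1`. Using the same `az` for both calls is the whole point: with cross-AZ attach disabled, mixing zones would fail.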

4. DCN2 location YAML files

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn2/node-info.yaml

```yaml
parameter_defaults:
  ControllerCount: 0
  ComputeCount: 0
  OvercloudControllerFlavor: control
  OvercloudComputeFlavor: compute
  OvercloudComputeLeaf1Flavor: compute-leaf1
  OvercloudComputeLeaf2Flavor: compute-leaf2
  ComputeLeaf1Count: 0
  ComputeLeaf2Count: 1
```

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn2/site-name.yaml

```yaml
parameter_defaults:
  NovaComputeAvailabilityZone: dcn2
  NovaCrossAZAttach: false
  RootStackName: dcn2
  ManageNetworks: false
  ComputeLeaf2ExtraConfig:
    neutron::agents::dhcp::availability_zone: 'dcn2'
```

5. YAML files added to the central location after the DCN deployment

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat glance_update.yaml

```yaml
parameter_defaults:
  GlanceEnabledImportMethods: web-download,copy-image
  GlanceBackend: rbd
  GlanceStoreDescription: 'central rbd glance store'
  GlanceBackendID: central
  CephClusterName: central
  GlanceMultistoreConfig:
    dcn1:
      GlanceBackend: rbd
      GlanceStoreDescription: 'dcn1 rbd glance store'
      CephClientUserName: 'openstack'
      CephClusterName: dcn1
      GlanceBackendID: dcn1
```
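With `GlanceEnabledImportMethods: web-download,copy-image` and one Glance store per site, an image can be imported into several stores in one call, or copied later to a store that does not have it yet (this is what gives you images cached at the edge). Here is a sketch using the `glance` client's interoperable-import commands. The image name and URL are hypothetical, and you should check `glance help image-create-via-import` for the options your client version supports.

```bash
# Import an image from a URL directly into the listed stores (web-download).
import_to_stores() {
  local name="$1" url="$2" stores="$3"   # stores: comma-separated, e.g. central,dcn1
  glance image-create-via-import \
    --disk-format qcow2 --container-format bare \
    --name "$name" --uri "$url" \
    --import-method web-download \
    --stores "$stores"
}

# Copy an already imported image to additional stores (copy-image).
copy_to_stores() {
  local image_id="$1" stores="$2"
  glance image-import "$image_id" --stores "$stores" --import-method copy-image
}
```

For example, `import_to_stores cirros http://example.com/cirros.qcow2 central,dcn1`. Running `glance stores-info` first is a quick way to confirm which store IDs (`central`, `dcn1`) Glance actually exposes.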

(undercloud) [stack@chrisj-dcn2-undercloud templates]$ cat dcn_ceph.yaml

```yaml
parameter_defaults:
  CephExternalMultiConfig:
    - ceph_conf_overrides:
        client:
          keyring: /etc/ceph/dcn1.client.openstack.keyring
      cluster: dcn1
      dashboard_enabled: false
      external_cluster_mon_ips: 10.40.1.82,10.40.1.141,10.40.1.158
      fsid: secret
      keys:
        - caps:
            mgr: allow *
            mon: profile rbd
            osd: profile rbd pool=vms, profile rbd pool=volumes, profile rbd pool=images
          key: secret
          mode: '0600'
          name: client.openstack
```
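Since the central site reaches the DCN1 Ceph cluster over routed storage networks (10.40.0.0/24 and 10.40.1.0/24 in this lab), it can be worth confirming from a central controller that every monitor in `external_cluster_mon_ips` is reachable before redeploying. Here is a rough sketch using bash's `/dev/tcp`. The ports assume Ceph's defaults (3300 for msgr2, 6789 for msgr1), and a plain TCP connect is only a smoke test, not a Ceph health check.

```bash
# Split a comma-separated mon list (as in external_cluster_mon_ips) into lines.
mon_list() {
  tr ',' '\n' <<< "$1" | sed '/^$/d'
}

# Try a TCP connect to each mon on the default ports; non-zero if any fail.
check_mons() {
  local mons="$1" ip port failed=0
  for ip in $(mon_list "$mons"); do
    for port in 3300 6789; do
      if timeout 3 bash -c "exec 3<>/dev/tcp/${ip}/${port}" 2>/dev/null; then
        echo "ok   ${ip}:${port}"
      else
        echo "FAIL ${ip}:${port}"
        failed=1
      fi
    done
  done
  return "$failed"
}
```

For this lab that would be `check_mons 10.40.1.82,10.40.1.141,10.40.1.158`.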

III. Summary

I must admit that I had a lot of fun exploring the Edge/DCN capabilities in OSP 16.1. Getting to the MVP was not easy, mostly due to missing or incomplete documentation, but once I had it fully up, adding or adjusting its features was a no-brainer.

I hope this additional documentation helps someone get it running in their private cloud. It’s definitely worth a try.