Another day, another “Edge” architecture. This time, let’s see what the minimum all-in-one OCP/OCS/CNV setup would have to look like.

But first, what are the key benefits?

- Small footprint

- High Availability

- Virtualization with VM HA

- HCI storage

- The best container platform there is

Okay, I got you this far, so let me reward you with a short 2-minute demo of how this is going to work once you’re done: https://www.youtube.com/embed/8Xe1bJIda3c

I have to warn you, this is not a fully approved/tested setup and you would likely need a support exception if you wanted Red Hat to support you on this design.

Also, don’t let the “one” in all-in-one mislead you. We really need to start with 4 hosts (1x bootstrap and 3x masters/workers). By the end of this example, the cluster itself runs on just 3 nodes.

Red Hat official documentation:

https://openshift-kni.github.io/baremetal-deploy/4.7/Deployment

Prerequisites:

You need to start with pre-defining your hardware. This is a minimum spec that worked for me:

| Role | RAM | CPU | NIC | Disk |
|---|---|---|---|---|
| 1x Bootstrap | 20GB | 6 | 1x pxe, 1x baremetal | 100GB |
| 3x Master/worker | 52GB | 24 | 1x pxe, 1x baremetal | 150GB + 250GB |
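
If you want to sanity-check a host against the table above before going any further, here is a minimal sketch. The function names are my own, and the thresholds in the example call are simply the master/worker row; swap in 20 and 6 for the bootstrap host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare RAM (GB) and CPU count to a minimum.
# Usage: meets_min_spec <ram_gb> <cpus> <min_ram_gb> <min_cpus>
meets_min_spec() {
  local ram_gb="$1" cpus="$2" min_ram_gb="$3" min_cpus="$4"
  [ "$ram_gb" -ge "$min_ram_gb" ] && [ "$cpus" -ge "$min_cpus" ]
}

# Local values on Linux. MemTotal is reported in kB; it is rounded to the
# nearest GB because the kernel keeps a little memory for itself.
local_ram_gb() {
  awk '/^MemTotal:/ {printf "%d\n", ($2 / 1024 / 1024) + 0.5}' /proc/meminfo
}
local_cpus() { nproc; }

if meets_min_spec "$(local_ram_gb)" "$(local_cpus)" 52 24; then
  echo "host meets the master/worker minimum"
else
  echo "host is below the master/worker minimum"
fi
```

Disk and NIC checks are left out on purpose, since device names differ too much between labs.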

Bootstrap Configuration

- Log into the bootstrap machine (I configured mine on a registered RHEL 8 system with a dedicated kni user)

ssh kni@<ip-learned-from-horizon>

- Updating system

[kni@bootstrap ~]$ sudo yum update -y

Check whether the bootstrap node needs to be restarted:

[kni@bootstrap ~]$ needs-restarting -r
Core libraries or services have been updated since boot-up:
  * dbus
  * dbus-daemon
  * kernel
  * linux-firmware
Reboot is required to fully utilize these updates.
More information: https://access.redhat.com/solutions/27943

Note: needs-restarting is part of the yum-utils package.

[kni@bootstrap ~]$ sudo reboot    # only if the check above says a reboot is required
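
The check-then-reboot step can also be scripted. This is a small sketch that relies on `needs-restarting -r` exiting with status 1 when a reboot is required and 0 when it is not. `CHECK_CMD` is a hypothetical override I added so the function can be exercised without yum-utils installed.

```shell
#!/usr/bin/env bash
# Succeeds only when the reboot hint command exits with status 1
# ("reboot required"). Any other status, including "command not found",
# is treated as "no reboot needed".
reboot_required() {
  local rc=0
  "${CHECK_CMD:-needs-restarting}" -r >/dev/null 2>&1 || rc=$?
  [ "$rc" -eq 1 ]
}

# Example use (left commented out so pasting this does not reboot anything):
# if reboot_required; then sudo reboot; fi
```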

- Installing the KNI Packages

[kni@bootstrap ~]$ sudo dnf install -y libvirt qemu-kvm mkisofs python3-devel jq ipmitool

—

- Add the kni user to the libvirt group.

[kni@bootstrap ~]$ sudo usermod --append --groups libvirt kni

- Start and enable libvirtd

[kni@bootstrap ~]$ sudo systemctl enable libvirtd --now

- Create the default storage pool and start it.

[kni@bootstrap ~]$ sudo virsh pool-define-as --name default --type dir --target /var/lib/libvirt/images

Pool default defined

[kni@bootstrap ~]$ sudo virsh pool-start default

Pool default started

[kni@bootstrap ~]$ sudo virsh pool-autostart default

Pool default marked as autostarted

- Set up networking

export PROV_CONN="eth1"

sudo nmcli con down "$PROV_CONN"

sudo nmcli con delete "$PROV_CONN"

sudo nmcli con down "System $PROV_CONN"

sudo nmcli con delete "System $PROV_CONN"

sudo nmcli connection add ifname provisioning type bridge con-name provisioning

sudo nmcli con add type bridge-slave ifname "$PROV_CONN" master provisioning

sudo nmcli connection modify provisioning ipv4.addresses 10.10.0.10/24 ipv4.method manual

sudo nmcli con down provisioning

sudo nmcli con up provisioning

export BM_CONN="eth2"

sudo nmcli con down "$BM_CONN"

sudo nmcli con delete "$BM_CONN"

sudo nmcli con down "System $BM_CONN"

sudo nmcli con delete "System $BM_CONN"

sudo nmcli connection add ifname baremetal type bridge con-name baremetal

sudo nmcli con add type bridge-slave ifname "$BM_CONN" master baremetal

sudo nmcli connection modify baremetal ipv4.addresses 10.20.0.10/24 ipv4.method manual

sudo nmcli con down baremetal

sudo nmcli con up baremetal

- Verify the networking looks similar to this:

[kni@bootstrap ~]$ nmcli con show

NAME UUID TYPE DEVICE

System eth0 5fb06bd0-0bb0-7ffb-45f1-d6edd65f3e03 ethernet eth0

baremetal 7a452607-2196-49d2-a145-6cd760a526eb bridge baremetal

provisioning 6089a4a6-d3d3-4fff-8d99-04ec920d2754 bridge provisioning

bridge-slave-eth1 40e4e145-a948-4832-b51a-9373ef5f0d45 ethernet eth1

bridge-slave-eth2 7f73af05-d3e7-4564-8212-9a66a8f1f347 ethernet eth2
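
Since the provisioning and baremetal bridges are built with the same sequence of commands, the two passes can be folded into one function. This is only a sketch: `make_bridge`, `run`, and the `DRY_RUN` switch are names I made up, and dry-run is the default so nothing touches your network until you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# With DRY_RUN=1 (the default here) commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: make_bridge <nic> <bridge-name> <ip/prefix>
make_bridge() {
  local nic="$1" bridge="$2" addr="$3"
  # Drop any existing profiles for the NIC; ignore errors if none exist.
  run sudo nmcli con down "$nic" || true
  run sudo nmcli con delete "$nic" || true
  run sudo nmcli con down "System $nic" || true
  run sudo nmcli con delete "System $nic" || true
  # Create the bridge, enslave the NIC, and give the bridge a static address.
  run sudo nmcli connection add ifname "$bridge" type bridge con-name "$bridge"
  run sudo nmcli con add type bridge-slave ifname "$nic" master "$bridge"
  run sudo nmcli connection modify "$bridge" ipv4.addresses "$addr" ipv4.method manual
  run sudo nmcli con down "$bridge"
  run sudo nmcli con up "$bridge"
}

make_bridge eth1 provisioning 10.10.0.10/24
make_bridge eth2 baremetal 10.20.0.10/24
```

Run it once in dry-run mode and compare the printed commands with the ones above before switching to `DRY_RUN=0`.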

- Create a pull-secret.txt file.

[kni@bootstrap ~]$ vi pull-secret.txt

In a web browser, navigate to Install on Bare Metal with user-provisioned infrastructure, and scroll down to the Downloads section. Click Copy pull secret. Paste the contents into the pull-secret.txt file and save the contents in the kni user’s home directory.

OpenShift Installation

- Retrieving OpenShift Installer GA

[kni@bootstrap ~]$ export VERSION=latest-4.7

[kni@bootstrap ~]$ export RELEASE_IMAGE=$(curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/release.txt | grep 'Pull From: quay.io' | awk -F ' ' '{print $3}')

[kni@bootstrap ~]$ export cmd=openshift-baremetal-install

[kni@bootstrap ~]$ export pullsecret_file=~/pull-secret.txt

[kni@bootstrap ~]$ export extract_dir=$(pwd)

[kni@bootstrap ~]$ curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/openshift-client-linux.tar.gz | tar zxvf - oc

oc

[kni@bootstrap ~]$ sudo cp oc /usr/local/bin

[kni@bootstrap ~]$ oc adm release extract --registry-config "${pullsecret_file}" --command=$cmd --to "${extract_dir}" ${RELEASE_IMAGE}

- Verify the installer file has been downloaded

[kni@bootstrap ~]$ ls

GoodieBag nohup.out oc openshift-baremetal-install pull-secret.txt
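
The `grep | awk` pipeline that fills `RELEASE_IMAGE` fails silently if the mirror is unreachable, and you only notice later when `oc adm release extract` complains. Here is a small sketch of the same parsing as a function you can test offline. The helper name is mine, and the sample text uses a made-up digest, not a real release.

```shell
#!/usr/bin/env bash
# Read release.txt content on stdin and print the image reference from the
# "Pull From:" line. Splitting on whitespace makes the reference field 3.
parse_release_image() {
  awk '/Pull From: quay.io/ {print $3; exit}'
}

# Offline demo with placeholder content:
sample='Name:      4.7.0
Pull From: quay.io/openshift-release-dev/ocp-release@sha256:0000'
printf '%s\n' "$sample" | parse_release_image
```

In the real flow you would pipe the `curl -s .../release.txt` output into `parse_release_image` and stop if the result is empty.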

- Create install-config.yaml.

4. Here is a good template for what your all-in-3 install-config should look like:

[kni@bootstrap ~]$ vi GoodieBag/install-config.yaml

apiVersion: v1
baseDomain: hexo.lab
metadata:
  name: "kni-aio"
networking:
  machineCIDR: 10.20.0.0/24
  networkType: OVNKubernetes
compute:
- name: worker
  replicas: 0
controlPlane:
  name: master
  replicas: 3
  platform:
    baremetal: {}
platform:
  baremetal:
    apiVIP: 10.20.0.200
    ingressVIP: 10.20.0.201
    provisioningNetworkCIDR: 10.10.0.0/24
    provisioningNetworkInterface: ens3
    hosts:
      - name: "kni-worker1"
        bootMACAddress: "fa:16:3e:50:10:bf"
        #role: worker
        rootDeviceHints:
          deviceName: "/dev/vda"
        hardwareProfile: default
        bmc:
          address: "ipmi://X.X.X.X"
          username: "kni-aio"
          password: "Passw0rd"
      - name: "kni-worker2"
        bootMACAddress: "fa:16:3e:46:fd:6c"
        #role: worker
        rootDeviceHints:
          deviceName: "/dev/vda"
        hardwareProfile: default
        bmc:
          address: "ipmi://X.X.X.X"
          username: "kni-aio"
          password: "Passw0rd"
      - name: "kni-worker3"
        bootMACAddress: "fa:16:3e:4c:6f:18"
        #role: worker
        rootDeviceHints:
          deviceName: "/dev/vda"
        hardwareProfile: default
        bmc:
          address: "ipmi://X.X.X.X"
          username: "kni-aio"
          password: "Passw0rd"
pullSecret: '<pull_secret>'
sshKey: '<ssh_pub_key>'
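
One mistake that is easy to make in this file is picking an `apiVIP` or `ingressVIP` that falls outside `machineCIDR`. Here is a rough pre-flight check in plain shell arithmetic. The helper names `ip_to_int` and `in_cidr` are my own and it handles IPv4 only.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local o1 o2 o3 o4 rest
  o1=${1%%.*}; rest=${1#*.}
  o2=${rest%%.*}; rest=${rest#*.}
  o3=${rest%%.*}; o4=${rest#*.}
  echo $(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
}

# Usage: in_cidr <ip> <network/prefix>; succeeds if ip is inside the network.
in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The two VIPs and the machineCIDR from the install-config above.
for vip in 10.20.0.200 10.20.0.201; do
  if in_cidr "$vip" 10.20.0.0/24; then
    echo "$vip is inside machineCIDR"
  else
    echo "$vip is OUTSIDE machineCIDR"
  fi
done
```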

5. Your pull secret should be available in the kni user’s home directory

(kni-test) [kni@bootstrap ~]$ cat pull-secret.txt

{"auths":{"cloud.openshift.com":{"auth":…..

6. The SSH key should be in your default directory, but feel free to generate one if it’s not there

[kni@bootstrap ~]$ ssh-keygen

[kni@bootstrap ~]$ cat .ssh/id_rsa.pub

7. OpenShift Baremetal IPI also requires you to provide DHCP and DNS. This is what I used in my lab for that:

[kni@bootstrap ~]$ cat GoodieBag/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.20.0.10 bootstrap.kni-aio.hexo.lab bootstrap

10.20.0.31 kni-worker1.kni-aio.hexo.lab kni-worker1

10.20.0.32 kni-worker2.kni-aio.hexo.lab kni-worker2

10.20.0.33 kni-worker3.kni-aio.hexo.lab kni-worker3

10.20.0.200 api.kni-aio.hexo.lab api

10.20.0.10 ns1.kni-aio.hexo.lab ns1

[kni@bootstrap ~]$ cat GoodieBag/kni.dns

domain-needed

bind-dynamic

bogus-priv

domain=kni-aio.hexo.lab

dhcp-range=10.20.0.100,10.20.0.149

dhcp-option=3,10.20.0.1

resolv-file=/etc/resolv.conf.upstream

interface=baremetal

server=10.20.0.10

#Wildcard for apps — make changes to cluster-name (openshift) and domain (example.com)

address=/.apps.kni-aio.hexo.lab/10.20.0.201

#Static IPs for Masters

#dhcp-host=<NIC2-mac-address>,provisioner.openshift.example.com,<ip-of-provisioner>

dhcp-host=fa:16:3e:b4:c4:36,kni-worker1.kni-aio.hexo.lab,10.20.0.31

dhcp-host=fa:16:3e:f6:05:c9,kni-worker2.kni-aio.hexo.lab,10.20.0.32

dhcp-host=fa:16:3e:98:11:00,kni-worker3.kni-aio.hexo.lab,10.20.0.33

[kni@bootstrap ~]$ cat GoodieBag/resolv.conf.upstream

search hexo.lab

nameserver <my_public_dns>

[kni@bootstrap ~]$ cat GoodieBag/resolv.conf

search hexo.lab

nameserver 10.20.0.10

nameserver <my_public_dns>

[kni@bootstrap ~]$ sudo cp GoodieBag/hosts /etc/

[kni@bootstrap ~]$ sudo cp GoodieBag/kni.dns /etc/dnsmasq.d/

[kni@bootstrap ~]$ sudo cp GoodieBag/resolv.conf.upstream /etc/

[kni@bootstrap ~]$ sudo cp GoodieBag/resolv.conf /etc/

[kni@bootstrap ~]$ sudo systemctl enable --now dnsmasq

Created symlink /etc/systemd/system/multi-user.target.wants/dnsmasq.service → /usr/lib/systemd/system/dnsmasq.service.
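
With names and addresses spread over `hosts` and `kni.dns`, it is easy for the two files to drift apart. The following is a sketch of a consistency check, assuming the file layouts shown above. `dhcp_hosts` and `check_hosts` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print "<ip> <fqdn>" for every active dhcp-host line in a dnsmasq config.
# Commented-out lines are skipped because they do not start with "dhcp-host=".
dhcp_hosts() {
  awk -F'[=,]' '/^dhcp-host=/ {print $4, $3}' "$1"
}

# Usage: check_hosts <dnsmasq-conf> <hosts-file>
# Fails if any DHCP reservation has no matching "<ip> <fqdn>" hosts entry.
check_hosts() {
  local missing=0 ip fqdn
  while read -r ip fqdn; do
    [ -n "$ip" ] || continue
    if ! awk -v ip="$ip" -v fqdn="$fqdn" \
         '$1 == ip && $2 == fqdn {found = 1} END {exit !found}' "$2"; then
      echo "missing in $2: $ip $fqdn"
      missing=1
    fi
  done <<EOF
$(dhcp_hosts "$1")
EOF
  return "$missing"
}
```

For the files in this post the call would be `check_hosts GoodieBag/kni.dns GoodieBag/hosts`.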

8. Finally, we should be ready to deploy the OpenShift cluster

Clean up first if this is not your first attempt

for i in $(sudo virsh list | tail -n +3 | grep bootstrap | awk '{print $2}');
do
  sudo virsh destroy $i;
  sudo virsh undefine $i;
  sudo virsh vol-delete $i --pool default;
  sudo virsh vol-delete $i.ign --pool default;
done

[kni@bootstrap ~]$ rm -rf ~/clusterconfigs/auth ~/clusterconfigs/terraform* ~/clusterconfigs/tls ~/clusterconfigs/metadata.json

- Create working directory

[kni@bootstrap ~]$ mkdir ~/clusterconfigs

- Deploy (in tmux)

[kni@bootstrap ~]$ cp GoodieBag/install-config.yaml clusterconfigs/

[kni@bootstrap ~]$ ./openshift-baremetal-install --dir ~/clusterconfigs --log-level debug create cluster

- You can monitor your deployment in another window

[kni@bootstrap ~]$ sudo virsh list

 Id   Name                        State
-------------------------------------------
 1    kni-test2-ntpgv-bootstrap   running

[kni@bootstrap ~]$ sudo virsh console <bootstrap-vm>

Some other commands that will come in handy in the later stages of the deployment:

[kni@bootstrap ~]$ export KUBECONFIG=/home/kni/clusterconfigs/auth/kubeconfig

[kni@bootstrap ~]$ oc get clusteroperators

[kni@bootstrap ~]$ oc get nodes

(kni-aio) [kni@bootstrap ~]$ oc get nodes

NAME STATUS ROLES AGE VERSION

kni-worker1 Ready master,worker 49m v1.19.0+2f3101c

kni-worker2 Ready master,worker 48m v1.19.0+2f3101c

kni-worker3 Ready master,worker 48m v1.19.0+2f3101c
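
Instead of re-running `oc get nodes` by hand, you can count the Ready nodes and loop until all three show up. This is a minimal sketch; the helper name is mine and it simply parses the plain-text output shown above.

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS column is exactly "Ready" in `oc get nodes`
# output read from stdin. The header is skipped because its second field
# is "STATUS", and "NotReady" does not match either.
count_ready_nodes() {
  awk '$2 == "Ready" {n++} END {print n + 0}'
}

# Offline demo using the output from this deployment:
count_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
kni-worker1 Ready master,worker 49m v1.19.0+2f3101c
kni-worker2 Ready master,worker 48m v1.19.0+2f3101c
kni-worker3 Ready master,worker 48m v1.19.0+2f3101c
EOF
```

Against a live cluster, something like `until [ "$(oc get nodes | count_ready_nodes)" -eq 3 ]; do sleep 30; done` does the waiting for you.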

- I hope it works! If it does, then at the end of the deployment you will see something like this:

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/home/kni/clusterconfigs/auth/kubeconfig'

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.kni-aio.hexo.lab

INFO Login to the console with user: "kubeadmin", and password: "ss4nv-kmhMP-dM6c7-edSVb"

DEBUG Time elapsed per stage:

DEBUG Infrastructure: 50m41s

DEBUG Bootstrap Complete: 12m39s

DEBUG Bootstrap Destroy: 13s

DEBUG Cluster Operators: 38m12s

INFO Time elapsed: 1h42m24s

Deploying OCS (storage)

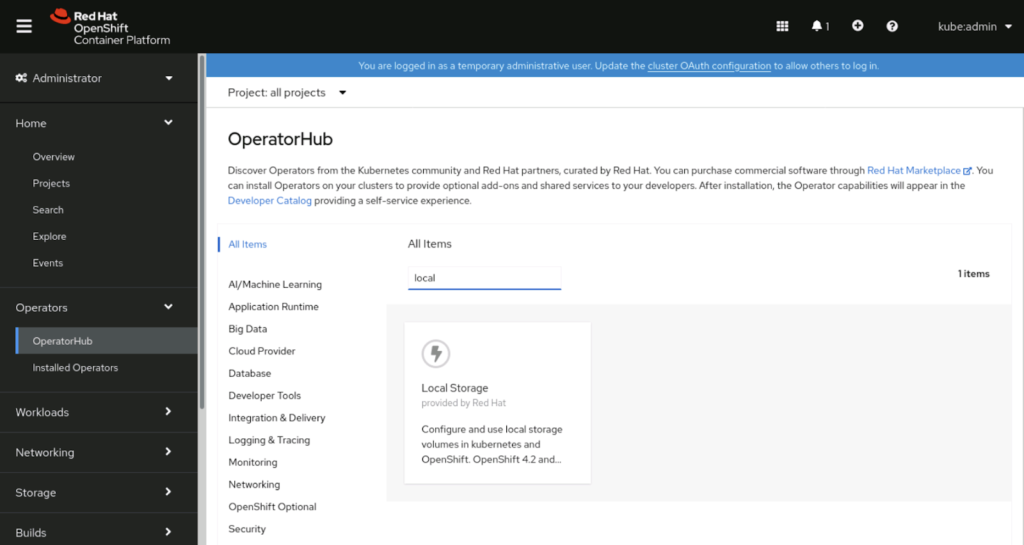

- Install local storage operator

- Keep the defaults and just hit install

- I have preconfigured worker nodes with 250GB secondary drives

[kni@bootstrap ~]$ ssh core@kni-worker1

Red Hat Enterprise Linux CoreOS 46.82.202010301241-0

Part of OpenShift 4.6, RHCOS is a Kubernetes native operating system

managed by the Machine Config Operator (`clusteroperator/machine-config`).

WARNING: Direct SSH access to machines is not recommended; instead,

make configuration changes via `machineconfig` objects:

https://docs.openshift.com/container-platform/4.6/architecture/architecture-rhcos.html

—

[core@kni-worker1 ~]$ sudo fdisk -l | grep vdb

Disk /dev/vdb: 250 GiB, 107374182400 bytes, 209715200 sectors

- Create local disk

[kni@bootstrap ~]$ cat localstorage.yaml

apiVersion: "local.storage.openshift.io/v1"
kind: "LocalVolume"
metadata:
  name: "local-disks"
  namespace: "openshift-local-storage"
spec:
  nodeSelector:
    nodeSelectorTerms:
    - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - kni-worker1
          - kni-worker2
          - kni-worker3
  storageClassDevices:
    - storageClassName: "localblock-sc"
      volumeMode: Block
      devicePaths:
        - /dev/vdb

[kni@bootstrap ~]$ oc create -f localstorage.yaml

- Verify local storage has been created

[kni@bootstrap ~]$ oc get all -n openshift-local-storage

NAME READY STATUS RESTARTS AGE

pod/diskmaker-discovery-jpcxt 1/1 Running 0 6m6s

pod/diskmaker-discovery-lv7m7 1/1 Running 0 6m6s

pod/diskmaker-discovery-x66cs 1/1 Running 0 6m6s

pod/local-disks-local-diskmaker-ccmsx 1/1 Running 0 91s

pod/local-disks-local-diskmaker-gqxm9 1/1 Running 0 92s

pod/local-disks-local-diskmaker-sz9p7 1/1 Running 0 91s

pod/local-disks-local-provisioner-bkt2b 1/1 Running 0 92s

pod/local-disks-local-provisioner-lw7t7 1/1 Running 0 92s

pod/local-disks-local-provisioner-rjt2c 1/1 Running 0 92s

pod/local-storage-operator-fdbb85956-2bb85 1/1 Running 0 10m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/local-storage-operator-metrics ClusterIP 172.30.3.92 <none> 8383/TCP,8686/TCP 9m45s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/diskmaker-discovery 3 3 3 3 3 <none> 6m6s

daemonset.apps/local-disks-local-diskmaker 3 3 3 3 3 <none> 92s

daemonset.apps/local-disks-local-provisioner 3 3 3 3 3 <none> 92s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/local-storage-operator 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/local-storage-operator-fdbb85956 1 1 1 10m

[kni@bootstrap ~]$ oc get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

local-pv-109d302d 250Gi RWO Delete Available localblock-sc 3m45s

local-pv-b5506d82 250Gi RWO Delete Available localblock-sc 3m45s

local-pv-dd42bba8 250Gi RWO Delete Available localblock-sc 3m45s

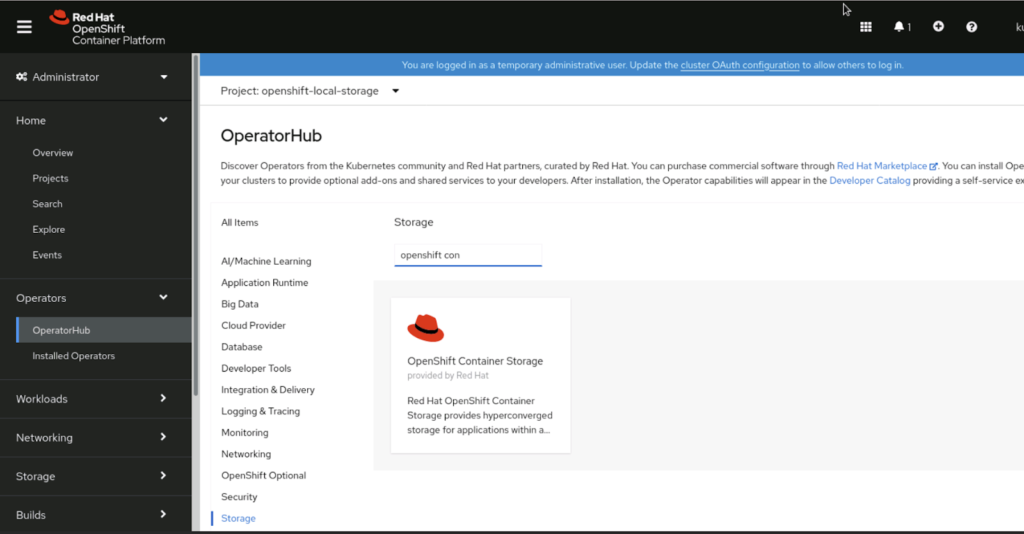

- Install ‘OpenShift Container Storage’ operator (leave all defaults)

- Create OCS cluster

For the All-in-one use case we need to label the nodes so they are available to OCS

for NODE in {1..3}; do
  oc label nodes kni-worker$NODE cluster.ocs.openshift.io/openshift-storage=''
done

[kni@bootstrap ~]$ cat ocs-storage.yaml

apiVersion: ocs.openshift.io/v1
kind: StorageCluster
metadata:
  name: ocs-storagecluster
  namespace: openshift-storage
spec:
  manageNodes: false
  monDataDirHostPath: /var/lib/rook
  storageDeviceSets:
  - count: 1
    dataPVCTemplate:
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 250Gi
        storageClassName: localblock-sc
        volumeMode: Block
    name: ocs-deviceset
    placement: {}
    portable: false
    replica: 3
    resources: {}

[kni@bootstrap ~]$ oc create -f ocs-storage.yaml
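
The storage cluster takes a while to come up, so before moving on to the registry or CNV it helps to wait for it. Below is a sketch of a generic retry helper plus a readiness check. `retry_until` and `storagecluster_ready` are names I made up, and I am assuming the StorageCluster reports `Ready` in `.status.phase` once it is healthy, so verify that against your OCS version.

```shell
#!/usr/bin/env bash
# Run a command until it succeeds, up to <attempts> times, sleeping
# <delay> seconds between tries.
# Usage: retry_until <attempts> <delay> <command> [args...]
retry_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Only sleep when another attempt is coming.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Assumed readiness check for the StorageCluster created above.
storagecluster_ready() {
  [ "$(oc get storagecluster ocs-storagecluster -n openshift-storage \
        -o jsonpath='{.status.phase}' 2>/dev/null)" = "Ready" ]
}
```

A call such as `retry_until 60 30 storagecluster_ready` would then poll every 30 seconds for up to half an hour.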

Configuring container registry on OCS -> https://docs.openshift.com/container-platform/4.7/registry/configuring_registry_storage/configuring-registry-storage-baremetal.html

Configuring CNV -> https://docs.openshift.com/container-platform/4.7/virt/install/preparing-cluster-for-virt.html

Conclusion

It might feel like a lot of steps, but most of the work has been laid out here, so you’re welcome 🙂

In the end you will enjoy a really powerful 3-node cluster that can not only host your legacy apps in VMs, but also set you on the road to running them in containers.