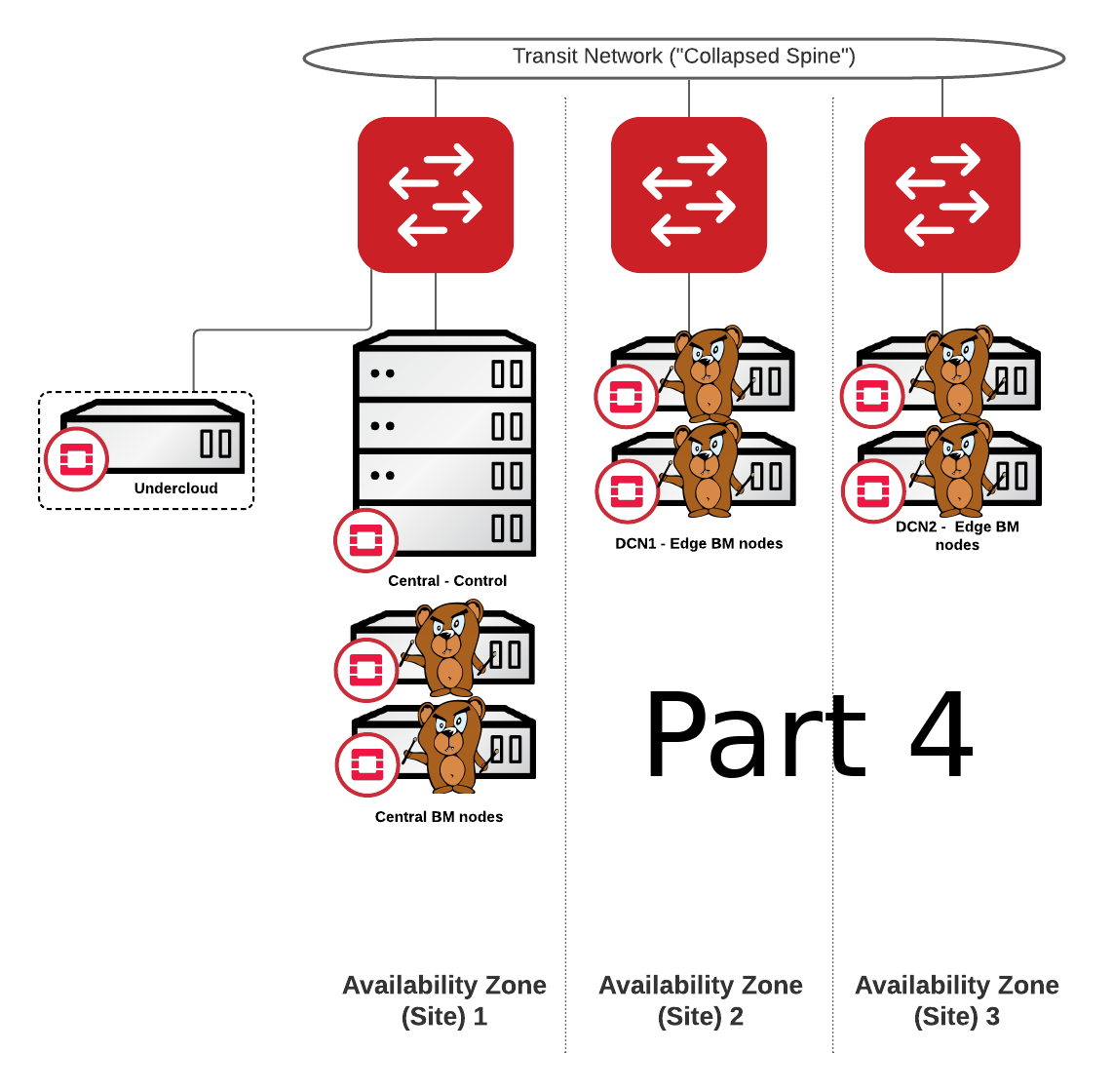

This is part 4 in my series on BareMetal-as-a-Service with Ironic. In this article I focus on highly distributed architectures: baremetal cloud as a service for the edge and for large environments.

What would be a good use of this architecture? I can think of a few examples:

– managing the lifecycle of hundreds of individual x86 servers that monitor the production of goods in the manufacturing industry

– almost any x86-based IoT use case

– managing highly distributed infrastructure sitting in the stores of large retailers

– managing unreleased server equipment directly at the desks of the engineers <wink>

– running distributed Kubernetes clusters

I meant to document this use case a long time ago: I have had it working since OpenStack Queens (OSP13), but I didn’t get to it until now (Train – OSP16.1). This is probably the least documented functionality of Ironic/Neutron. At the same time, it solves the problem of managing any workload on baremetal at the edge (or just distributed over multiple L3 networks for better resiliency).

Here is a quick demo on how it works in practice: https://www.youtube.com/embed/yM8P5TOr1hE

The diagram above shows the high-level architecture of my lab. The two networks below are the ones that matter for understanding the configs that follow:

| Network Name | IP Address | Description |
| --- | --- | --- |
| Provisioning | 10.10.0.0/24 | Used to deploy the control plane. Can be flat or have multiple segments in case you want to mix OpenStack hypervisors with the baremetal assets |
| Oc-provisioning | 10.60.0.0/24 (seg0), 10.60.1.0/24 (seg1), 10.60.2.0/24 (seg2) | Used to deploy baremetal assets. This one should definitely be segmented, and it is also easy to add new segments |

I. Overcloud Deployment

Deploy script (highlighted use case specific files and changes):

(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ cat deploy-central.sh
source ~/stackrc
cd ~/
time openstack overcloud deploy --templates --stack chrisj-bmaas \
  -n templates/network_data_spine_leaf.yaml \
  -r templates/central_roles.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/ironic.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/ironic-inspector.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml \
  -e templates/node-info.yaml \
  -e templates/network-environment.yaml \
  -e templates/host-memory.yaml \
  -e templates/inject-trust-anchor-hiera.yaml \
  -e templates/containers-prepare-parameter.yaml \
  -e templates/spine-leaf-ctlplane.yaml \
  -e templates/spine-leaf-vips.yaml \
  -e templates/ironic.yaml \
  --log-file chrisj-bmaas_deployment.log \
  --ntp-server 10.10.0.10
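A deploy like this runs for a long time, so it can be worth confirming up front that every local template passed with -n, -r and -e really exists. The sketch below is not part of my deploy script; the `check_templates` helper name is hypothetical, and the paths in the usage comment simply mirror the local files from the command above.

```shell
# Hypothetical helper: print every path that is not a regular file and
# return non-zero if at least one of them is missing.
check_templates() {
    local missing=0 f
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}

# Possible usage from ~/ before starting the deploy (not executed here):
#   check_templates templates/network_data_spine_leaf.yaml \
#       templates/central_roles.yaml templates/node-info.yaml \
#       templates/network-environment.yaml templates/ironic.yaml \
#     && ./deploy-central.sh
```

The function only checks for existence; it does not validate the YAML itself.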

To network_data_spine_leaf.yaml I added the following section for Ironic:

# custom network for overcloud provisioning
- name: OcProvisioning
  name_lower: oc_provisioning
  vip: true
  ip_subnet: '10.60.0.0/24'
  allocation_pools: [{'start': '10.60.0.10', 'end': '10.60.0.19'}]
  gateway_ip: '10.60.0.1'
  subnets:
    oc_provisioning_leaf1:
      ip_subnet: '10.60.1.0/24'
      allocation_pools: [{'start': '10.60.1.20', 'end': '10.60.1.30'}]
      gateway_ip: '10.60.1.1'
    oc_provisioning_leaf2:
      ip_subnet: '10.60.2.0/24'
      allocation_pools: [{'start': '10.60.2.20', 'end': '10.60.2.30'}]
      gateway_ip: '10.60.2.1'
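Since the two leaf subnets follow an obvious pattern, a stanza for an extra leaf can be generated rather than typed. This is only a sketch: `leaf_stanza` is a hypothetical helper, and it assumes a new leaf N keeps the 10.60.N.0/24 range, the .20 to .30 pool and the .1 gateway used by leaf1 and leaf2 above.

```shell
# Hypothetical helper: print the subnet stanza for leaf N, indented so it
# can be pasted under the "subnets:" key of the OcProvisioning network.
leaf_stanza() {
    local n="$1"
    cat <<EOF
    oc_provisioning_leaf${n}:
      ip_subnet: '10.60.${n}.0/24'
      allocation_pools: [{'start': '10.60.${n}.20', 'end': '10.60.${n}.30'}]
      gateway_ip: '10.60.${n}.1'
EOF
}

# Example: print the stanza for a third leaf.
leaf_stanza 3
```

The output still has to be merged into network_data_spine_leaf.yaml by hand, and the matching routing has to exist on the physical network.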

To central_roles.yaml I added the OcProvisioning network to the Controller role:

- name: Controller
  description: |
    Controller role that has all the controller services loaded and handles
    Database, Messaging and Network functions.
  CountDefault: 1
  tags:
    - primary
    - controller
  networks:
    External:
      subnet: external_subnet
    InternalApi:
      subnet: internal_api_subnet
    Storage:
      subnet: storage_subnet
    StorageMgmt:
      subnet: storage_mgmt_subnet
    Tenant:
      subnet: tenant_subnet
    OcProvisioning:
      subnet: oc_provisioning_subnet

In node-info.yaml (we are only deploying the control plane):

parameter_defaults:
  OvercloudControllerFlavor: control
  OvercloudComputeFlavor: compute
  OvercloudComputeLeaf1Flavor: compute-leaf1
  OvercloudComputeLeaf2Flavor: compute-leaf2
  ComputeLeaf1Count: 0
  ComputeLeaf2Count: 0
  #OvercloudCephStorageFlavor: ceph-storage
  ControllerCount: 3
  ComputeCount: 0
  #CephStorageCount: 0

templates/ironic.yaml holds all the remaining parameters:

(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ cat templates/ironic.yaml
parameter_defaults:
  SkipRhelEnforcement: true
  AdminPassword: <secret>
  NetworkDeploymentActions: ['CREATE','UPDATE']
  IronicCleaningDiskErase: metadata
  IronicIPXEEnabled: true
  IronicCleaningNetwork: oc_provisioning
  IronicProvisioningNetwork: oc_provisioning
  IronicInspectorSubnets:
    - ip_range: 10.60.0.100,10.60.0.129
      tag: segment0
      netmask: 255.255.255.0
      gateway: 10.60.0.1
    - ip_range: 10.60.1.100,10.60.1.129
      tag: segment1
      netmask: 255.255.255.0
      gateway: 10.60.1.1
    - ip_range: 10.60.2.100,10.60.2.129
      tag: segment2
      netmask: 255.255.255.0
      gateway: 10.60.2.1
  IPAImageURLs: '["http://10.10.0.10:8088/agent.kernel", "http://10.10.0.10:8088/agent.ramdisk"]'
  IronicInspectorInterface: br-baremetal
  IronicInspectorEnableNodeDiscovery: true
  IronicInspectorCollectors: default,extra-hardware,numa-topology,logs
  ServiceNetMap:
    IronicApiNetwork: oc_provisioning
    IronicNetwork: oc_provisioning
    IronicInspectorNetwork: oc_provisioning
    IronicProvisioningNetwork: oc_provisioning
  ControllerExtraConfig:
    ironic::inspector::add_ports: all
  NeutronMechanismDrivers: ['openvswitch', 'baremetal']
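The inspector ranges share each /24 with the addresses that director allocates from network_data_spine_leaf.yaml, so the two must not collide. A quick way to sanity-check this is to compare the last octets. The `ranges_disjoint` helper below is hypothetical and only works for ranges inside the same /24.

```shell
# Hypothetical helper: succeed if two inclusive last-octet ranges within
# the same /24 do not overlap. Arguments: start1 end1 start2 end2.
ranges_disjoint() {
    local s1="$1" e1="$2" s2="$3" e2="$4"
    [ "$e1" -lt "$s2" ] || [ "$e2" -lt "$s1" ]
}

# Segment 0: director pool .10-.19 versus inspector range .100-.129
ranges_disjoint 10 19 100 129 && echo "segment0 ok"
# Leaf segments: director pool .20-.30 versus inspector range .100-.129
ranges_disjoint 20 30 100 129 && echo "leaf segments ok"
```

Any other DHCP pool placed on these subnets can be checked against the inspector range in the same way.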

II. Post Deploy work and validations

After a successful overcloud deployment I executed the following post-deploy.sh script:

(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ cat post-deploy.sh
source ~/chrisj-bmaasrc
openstack aggregate create --property baremetal=true baremetal-hosts
for i in chrisj-bmaas-controller-0.localdomain chrisj-bmaas-controller-1.localdomain chrisj-bmaas-controller-2.localdomain ; do openstack aggregate add host baremetal-hosts $i ; done
openstack flavor create --id auto --ram 4096 --vcpus 1 --disk 8 --property baremetal=true --property resources:CUSTOM_BAREMETAL=1 --property resources:VCPU=0 --property resources:MEMORY_MB=0 --property resources:DISK_GB=0 --public baremetal
openstack keypair create --public-key ~/.ssh/id_rsa.pub stack
cd ~
mkdir images
tar -xvf /usr/share/rhosp-director-images/ironic-python-agent-latest.tar -C images/
cd images
openstack image create \
  --container-format aki \
  --disk-format aki \
  --public \
  --file ./ironic-python-agent.kernel bm-deploy-kernel
openstack image create \
  --container-format ari \
  --disk-format ari \
  --public \
  --file ./ironic-python-agent.initramfs bm-deploy-ramdisk
cd ~
mkdir images2
tar -xvf /usr/share/rhosp-director-images/overcloud-full-latest.tar -C images2/
cd images2
virt-customize -a overcloud-full.qcow2 --root-password password:Passw0rd
KERNEL_ID=$(openstack image create \
  --file overcloud-full.vmlinuz --public \
  --container-format aki --disk-format aki \
  -f value -c id overcloud-full.vmlinuz)
RAMDISK_ID=$(openstack image create \
  --file overcloud-full.initrd --public \
  --container-format ari --disk-format ari \
  -f value -c id overcloud-full.initrd)
openstack image create --file overcloud-full.qcow2 --public --container-format bare --disk-format qcow2 --property kernel_id=$KERNEL_ID --property ramdisk_id=$RAMDISK_ID rhel8-bm
cd ~
openstack network create oc_provisioning --provider-network-type flat --provider-physical-network baremetal --share --mtu 1500
SID=$(openstack network segment list --network oc_provisioning -c ID -f value)
openstack network segment set --name segment0 $SID
openstack network segment create --network oc_provisioning --physical-network segment1 --network-type flat segment1
openstack network segment create --network oc_provisioning --physical-network segment2 --network-type flat segment2
openstack subnet create --network-segment segment0 --network oc_provisioning --subnet-range 10.60.0.0/24 --dhcp --allocation-pool start=10.60.0.150,end=10.60.0.199 --gateway 10.60.0.1 oc_provisioning-seg0
openstack subnet create --network-segment segment1 --network oc_provisioning --subnet-range 10.60.1.0/24 --dhcp --allocation-pool start=10.60.1.150,end=10.60.1.199 --gateway 10.60.1.1 oc_provisioning-seg1
openstack subnet create --network-segment segment2 --network oc_provisioning --subnet-range 10.60.2.0/24 --dhcp --allocation-pool start=10.60.2.150,end=10.60.2.199 --gateway 10.60.2.1 oc_provisioning-seg2
openstack port list --network oc_provisioning --device-owner network:dhcp -c "Fixed IP Addresses"
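The segment and subnet commands for segment1 and segment2 differ only in the segment number, so adding another segment can be scripted. The sketch below is a dry run that only prints the commands. `add_segment_cmds` is a hypothetical helper, and it assumes a new segment N keeps the segmentN physical network name, the 10.60.N.0/24 range and the .150 to .199 pool used above.

```shell
# Hypothetical dry-run helper: print (not execute) the commands that would
# add routed segment N to the oc_provisioning network.
add_segment_cmds() {
    local n="$1" net=oc_provisioning
    echo "openstack network segment create --network ${net}" \
         "--physical-network segment${n} --network-type flat segment${n}"
    echo "openstack subnet create --network-segment segment${n} --network ${net}" \
         "--subnet-range 10.60.${n}.0/24 --dhcp" \
         "--allocation-pool start=10.60.${n}.150,end=10.60.${n}.199" \
         "--gateway 10.60.${n}.1 ${net}-seg${n}"
}

# Example: show what would be run for a third segment.
add_segment_cmds 3
```

Printing first makes it easy to review the commands before piping them to a shell. A new segment would also need its own IronicInspectorSubnets entry if inspection is used there.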

I then added the new Ironic nodes manually using the following bm_nodes.yaml file:

(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ cat bm_nodes.yaml
nodes:
  - ports:
      - address: "fa:16:3e:5e:f5:57"
        physical_network: "segment2"
    name: "overcloud_bm2_leaf2"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.161"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
  - ports:
      - address: "fa:16:3e:f1:2a:f0"
        physical_network: "segment2"
    name: "overcloud_bm1_leaf2"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.52"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
  - ports:
      - address: "fa:16:3e:14:e9:49"
        physical_network: "segment1"
    name: "overcloud_bm2_leaf1"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.250"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
  - ports:
      - address: "fa:16:3e:0c:1a:33"
        physical_network: "segment1"
    name: "overcloud_bm1_leaf1"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.130"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
  - ports:
      - address: "fa:16:3e:34:fa:bb"
        physical_network: "baremetal"
    name: "overcloud_bm2_leaf0"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.145"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
  - ports:
      - address: "fa:16:3e:0e:0b:31"
        physical_network: "baremetal"
    name: "overcloud_bm1_leaf0"
    properties:
      cpu_arch: "x86_64"
      cpu: 4
      memory_mb: 12288
      local_gb: 60
    driver_info:
      ipmi_address: "10.70.0.88"
      ipmi_username: "chrisj-bmaas"
      ipmi_password: "Passw0rd"
    driver: ipmi
    resource_class: baremetal
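The important detail in this file is that each port's physical_network matches the physical network of the segment the node is wired to: "baremetal" for segment0, "segment1" and "segment2" for the leaves. A typo here is easy to make, so a small check can help. The `check_physnets` helper below is hypothetical and assumes the file uses plain ASCII double quotes and the one-key-per-line layout shown above.

```shell
# Hypothetical helper: report physical_network values in a nodes file that
# are not in the allowed list. Usage: check_physnets FILE PHYSNET...
check_physnets() {
    local file="$1"; shift
    local allowed=" $* " bad=0 pn
    # Extract the unique physical_network values with the quotes stripped.
    for pn in $(awk '$1 == "physical_network:" { gsub(/"/, "", $2); print $2 }' "$file" | sort -u); do
        case "$allowed" in
            *" $pn "*) ;;
            *) echo "unknown physical_network: $pn"; bad=1 ;;
        esac
    done
    return "$bad"
}

# Possible usage (not executed here):
#   check_physnets bm_nodes.yaml baremetal segment1 segment2
```

This is a plain text match, not a YAML parser, so it only works for files formatted like the one above.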

Finally, I assigned the deploy kernel and ramdisk to the newly created BM nodes with the following commands:

KERNEL_UUID=$(openstack image show bm-deploy-kernel -f value -c id)
INITRAMFS_UUID=$(openstack image show bm-deploy-ramdisk -f value -c id)
for i in $(openstack baremetal node list | awk '/enroll/ { print $2 }'); do openstack baremetal node set $i --driver-info deploy_kernel=$KERNEL_UUID --driver-info deploy_ramdisk=$INITRAMFS_UUID; done
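The loop above picks nodes by grepping the table output for "enroll", which would also match a node that merely has "enroll" in its name. A slightly more robust variant is to let the CLI filter by provisioning state and print bare UUIDs. This is a sketch rather than what I actually ran; the `nodes_in_state` and `set_deploy_images` function names are hypothetical.

```shell
# Hypothetical helper: print the UUIDs of nodes in a given provisioning
# state, using the CLI filter and value formatter instead of table parsing.
nodes_in_state() {
    openstack baremetal node list --provision-state "$1" -f value -c UUID
}

# Hypothetical helper: set the deploy kernel and ramdisk on every node that
# is still in the enroll state. Arguments: KERNEL_UUID RAMDISK_UUID.
set_deploy_images() {
    local kernel="$1" ramdisk="$2" node
    for node in $(nodes_in_state enroll); do
        openstack baremetal node set "$node" \
            --driver-info deploy_kernel="$kernel" \
            --driver-info deploy_ramdisk="$ramdisk"
    done
}

# Possible usage (not executed here):
#   set_deploy_images "$KERNEL_UUID" "$INITRAMFS_UUID"
```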

Now my BM cloud is ready, and I can move the nodes through the manage and provide steps to make them available:

(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ openstack baremetal node list
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name                | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| 3963901f-fe91-4402-bb14-aed6f2163d4f | overcloud_bm2_leaf2 | None          | None        | enroll             | False       |
| 9e776aee-7829-4a37-bb8a-955e61f96c4e | overcloud_bm1_leaf2 | None          | None        | enroll             | False       |
| 7b8635ab-3c3e-40a2-9e9b-4983f1447082 | overcloud_bm2_leaf1 | None          | None        | enroll             | False       |
| 697ac471-8b8b-4156-8a31-95493bc5c226 | overcloud_bm1_leaf1 | None          | None        | enroll             | False       |
| d3fbc4f2-5c3e-426b-8b73-69b45b2dafa3 | overcloud_bm2_leaf0 | None          | None        | enroll             | False       |
| 04b2fd43-133c-44e0-a471-789fbda637ce | overcloud_bm1_leaf0 | None          | None        | enroll             | False       |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ for i in $(openstack baremetal node list | awk '/enroll/ {print $2}'); do openstack baremetal node manage $i; done
(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ openstack baremetal node list
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name                | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| 3963901f-fe91-4402-bb14-aed6f2163d4f | overcloud_bm2_leaf2 | None          | power off   | manageable         | False       |
| 9e776aee-7829-4a37-bb8a-955e61f96c4e | overcloud_bm1_leaf2 | None          | power off   | manageable         | False       |
| 7b8635ab-3c3e-40a2-9e9b-4983f1447082 | overcloud_bm2_leaf1 | None          | power off   | manageable         | False       |
| 697ac471-8b8b-4156-8a31-95493bc5c226 | overcloud_bm1_leaf1 | None          | power off   | manageable         | False       |
| d3fbc4f2-5c3e-426b-8b73-69b45b2dafa3 | overcloud_bm2_leaf0 | None          | power off   | manageable         | False       |
| 04b2fd43-133c-44e0-a471-789fbda637ce | overcloud_bm1_leaf0 | None          | power off   | manageable         | False       |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ for i in $(openstack baremetal node list | awk '/manageable/ {print $2}'); do openstack baremetal node provide $i; done
(chrisj-bmaas) [stack@chrisj-bmaas-undercloud ~]$ openstack baremetal node list
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name                | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
| 3963901f-fe91-4402-bb14-aed6f2163d4f | overcloud_bm2_leaf2 | None          | power off   | available          | False       |
| 9e776aee-7829-4a37-bb8a-955e61f96c4e | overcloud_bm1_leaf2 | None          | power off   | available          | False       |
| 7b8635ab-3c3e-40a2-9e9b-4983f1447082 | overcloud_bm2_leaf1 | None          | power off   | available          | False       |
| 697ac471-8b8b-4156-8a31-95493bc5c226 | overcloud_bm1_leaf1 | None          | power off   | available          | False       |
| d3fbc4f2-5c3e-426b-8b73-69b45b2dafa3 | overcloud_bm2_leaf0 | None          | power off   | available          | False       |
| 04b2fd43-133c-44e0-a471-789fbda637ce | overcloud_bm1_leaf0 | None          | power off   | available          | False       |
+--------------------------------------+---------------------+---------------+-------------+--------------------+-------------+
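The listings above show only the end result. With automated cleaning enabled, a node passes through cleaning states after `provide` before it shows up as available, so a script that continues straight away may be too early. Below is a minimal polling sketch. `wait_for_state` is a hypothetical helper, and the default of 30 attempts with a 10 second delay is an arbitrary assumption.

```shell
# Hypothetical helper: poll a node until it reaches the wanted provisioning
# state. Arguments: NODE STATE [ATTEMPTS] [DELAY_SECONDS]. Returns 0 once
# the state matches, 1 if the attempts run out.
wait_for_state() {
    local node="$1" want="$2" tries="${3:-30}" delay="${4:-10}" state=""
    while [ "$tries" -gt 0 ]; do
        state=$(openstack baremetal node show "$node" -f value -c provision_state)
        if [ "$state" = "$want" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    echo "node $node did not reach $want (last state: $state)" >&2
    return 1
}

# Possible usage (not executed here):
#   wait_for_state overcloud_bm1_leaf1 available
```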

I skipped inspection here, but that functionality is also available with the current configuration, as long as the proper DHCP relays are configured.